ACL Rolling Review Proposal

Published on June 19, 2020

Note: We are soliciting feedback from the ACL community on the short- and long-term reviewing proposals. If you would like to provide feedback, please do so here:

The ACL reviewing committee has been working on improvements to the reviewing process and has recently introduced short-term changes [1]. What follows is a proposal for a longer-term transition to a new review system for *ACL conferences, in which reviewing and acceptance of papers to publication venues are done in a two-step process:

- Step 1 -- Centralized Rolling Review: Authors submit papers to a unified review pool with monthly deadlines (similarly to TACL). Review is handled by an action editor, and revision and resubmission of papers is allowed.

- Step 2 -- Submission to Publication Venue: When a conference/workshop/journal submission opportunity comes around, authors may submit already reviewed papers to the conference, workshop, or journal for publication. Program chairs (possibly with the help of senior area chairs) will then accept a subset of submitted papers for presentation.

These two steps already exist in current reviewing processes: reviewers and ACs write reviews and meta reviews, which then get sent to SACs and program chairs for final acceptance decisions. The main difference in this proposal is that the reviews and meta reviews are done in a centralized, rolling process, which provides numerous benefits, as described below.

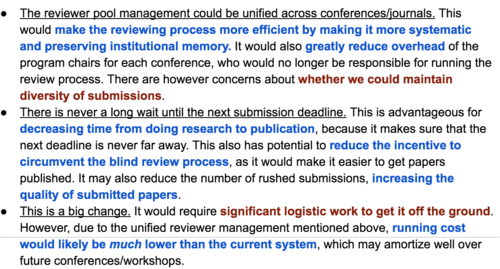

Potential Advantages/Concerns

Potential positive points of this new system are highlighted in blue; concerns are highlighted in red. Many of these are covered in detail in the following sections.

Details of Step 1 -- Centralized Review

The centralized review process will consist of submission to a centralized review system that has a large pool of potential “reviewers” (2000-4000). Among these reviewers, some percentage (20%?) who are particularly experienced or senior may also serve as “action editors”, who guide the review process and write meta-reviews for individual papers. There will also be a small number of “editors in chief” who oversee the entire process and handle any special cases (about 10).

Below is an outline of what the first-pass review process may look like on a step-by-step basis. The number of days is an idealized estimate for when the process goes smoothly, and will need to be adjusted on a case-by-case basis.

- Submission: Authors submit a paper to the unified review pool by a fixed deadline (e.g., the first of every month).

- Action Editor Assignment (0 days): A system automatically assesses the paper content and assigns an action editor from the pool of senior editors. This assignment is based on several criteria: (a) automatically determined content match between the paper and the action editors’ past publications, (b) lack of COIs, (c) any requests for a reduced/increased load entered into the system by the editor, and (d) review balance, where an action editor who has recently handled many papers will have a positive balance and thus not be assigned as often, and vice versa for a negative balance.

- Action Editor Confirmation (days 1-4): The action editor will confirm their ability to handle the paper and lack of COIs ASAP. If they have COIs or are not capable of handling the paper, they will be in charge of selecting another serving action editor from among several suggestions provided by the automatic system. If they do not feel that any of the action editors suggested by the system are adequately familiar with the topic, they can suggest an external action editor (who has not already reviewed for the reviewing pool), potentially also aided by a system. If there is no action editor in our community who can handle the paper at all, then the paper can be declined as out-of-scope (see “desk-rejects” below) after confirmation of this fact by an editor-in-chief.

- Desk Rejects and Reviewer Assignment (days 5-7): After the action editor has been confirmed, the action editor will quickly check the paper for any major violations in formatting or other factors and desk reject the paper if it is in violation. The action editor will then be presented with a list of reviewers automatically suggested from the pool by the system based on criteria (a-d) above. The action editor will choose reviewers, using this automatic suggestion as a base.

- Review (days 7-30): The reviewers conduct the normal review process.

- Editor Meta-review and Review Release (days 30-35): The action editor will read the reviews, discuss with the reviewers if necessary, and:

- Summarize the review results in a meta-review.

- Perform a compliance check, checking several boxes confirming “the paper satisfies the formatting requirements (e.g. abiding by the page limit),” “the paper is written in comprehensible English (or any language accepted by *ACL publications),” “the paper is compliant with the ACL ethical code,” “the paper is topically within the scope of at least one *ACL venue.” In the very rare case (e.g. 2-4% of submitted papers?) that a paper does not satisfy these minimal criteria, it will not be allowed to proceed to the next step and may face a moratorium on resubmission for a certain period of time.

- Next Steps: Assuming the paper passes the compliance check, the authors may then either:

- Revise and request another round of review: If the authors are not yet satisfied with their review results, they may revise the paper to reflect reviewer comments, write an author response, and return to the beginning of Step 1. In this case, the paper will usually go to the same action editor and set of reviewers (with some exceptions, see detailed discussion below), and the previous reviews and the author response to them may be attached, as is currently done in TACL.

- Submit to a publication venue: Proceed to Step 2 below, submitting the paper, with reviews and meta-reviews attached, to a conference/workshop/journal.

Notably, in the ideal case, this allows for a decision within a little over a month. Authors who want to make minor revisions then have a little less than a month to do so before the next deadline, and can take more time if they so choose (up to some reasonable time limit, e.g. 9 months, to prevent the article from losing relevance and the old reviews from becoming out-of-date).
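As a rough illustration of the Action Editor Assignment step, criteria (a)-(d) could be combined into a single ranking score. This is only a sketch under stated assumptions: the `Editor` fields, the multiplicative use of the load preference, and the `balance_weight` value are all hypothetical and are not specified in the proposal, and the content-match score is assumed to be precomputed by some other component.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Editor:
    name: str
    content_match: float    # (a) match between paper and past publications, in [0, 1] (assumed precomputed)
    has_coi: bool           # (b) conflict of interest with the submission
    load_preference: float  # (c) requested load multiplier: 1.0 = normal, 0.0 = no papers (hypothetical encoding)
    review_balance: int     # (d) positive if the editor has recently handled many papers


def assignment_score(editor: Editor, balance_weight: float = 0.1) -> Optional[float]:
    """Return a ranking score, or None if the editor is ineligible."""
    if editor.has_coi or editor.load_preference <= 0:
        return None
    # A positive balance lowers the score; a negative balance raises it.
    return editor.content_match * editor.load_preference - balance_weight * editor.review_balance


def rank_editors(editors: list[Editor]) -> list[Editor]:
    """Order eligible editors from best to worst candidate."""
    scored = [(assignment_score(e), e) for e in editors]
    eligible = [(s, e) for s, e in scored if s is not None]
    return [e for s, e in sorted(eligible, key=lambda pair: pair[0], reverse=True)]


# Made-up pool of four editors for a single paper.
pool = [
    Editor("A", content_match=0.90, has_coi=True, load_preference=1.0, review_balance=0),
    Editor("B", content_match=0.80, has_coi=False, load_preference=1.0, review_balance=3),
    Editor("C", content_match=0.70, has_coi=False, load_preference=1.0, review_balance=-1),
    Editor("D", content_match=0.95, has_coi=False, load_preference=0.0, review_balance=0),
]
print([e.name for e in rank_editors(pool)])  # ['C', 'B']
```

In this made-up pool, editor C outranks B despite a weaker content match because B has recently handled more papers. The same kind of ranked list could be shown to the action editor when suggesting reviewers, leaving the final choice to a human.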

Details of Step 2 -- Submission to Publication Venue

The steps for Step 2, submission to a publication venue, are as follows:

- Call for Submissions (-2 months or so): The publication venue would notify the community that they will be accepting submissions, and that papers that have been reviewed (and satisfy the compliance criteria) can be submitted at this time.

- Submission Deadline (0 days): Authors will submit reviewed papers to the venue if they wish their paper to be considered for publication there.

- Selection Process (2-6 weeks): The program chairs, possibly aided by area chairs, will sort through the already-reviewed submissions and build the program for the conference. Similarly to how program chairs do now, they will select the papers to be presented based on a combination of numerical scores, reviews and meta-reviews by the action editor, optional discussion between the PCs and the action editor, and consideration for diversity/direction of the program.

- Post-Selection: Selected papers will be presented in the conference/workshop/journal. Authors of papers that are not selected can either submit them to the next publication opportunity, or can revise and resubmit the paper in an attempt to improve the reviews.

Frequently Asked Questions/Concerns

Handling of Blind Review

During step 1, the centralized review process, papers will be blind according to the ACL review policy (for simplicity, we are not considering any changes to the blind review policy in this proposal).

During step 2, consideration for presentation at a publication venue, the process could either be blind or not blind. Currently, in many conferences the authors are not blind to program chairs, and either blind or not blind to area chairs, but this policy could be adjusted appropriately. One advantage of not requiring papers to be blind in step 2 is that this would free the authors to make their papers public after they are satisfied with the results of step 1 (i.e. they have good reviews and are likely to be accepted to a venue of the authors’ choice).

In addition, the *ACL may optionally consider establishing a centralized public blind (or after review, de-blinded) repository of papers submitted to the review process. This repository would be opt-in, so authors would be able to decide whether they want their papers to be made public during review. This would have the advantage of allowing authors who want their paper to be made available to do so in a way that still preserves blind review.

Handling of “Areas” and Ensuring Diversity in Scientific Content

There are a few concerns regarding how a monolithic reviewing process would make changes to the current system:

Areas: In conferences we currently have “areas” and “area chairs”, but in journals such as TACL (as well as other conferences such as ICLR) we just have “action editors” who handle each paper individually. How would this be resolved? Also, what are the selection criteria that program chairs use for selecting papers?

Diversity: An *ACL conference still has autonomy to put together its own program. But this autonomy is greatly reduced because papers can only be drawn from the common pool, which is produced by a monolithic reviewing system.

Answer: For the review process, we may not need “areas”; we would instead just make sure we assign a competent action editor and set of reviewers, which would be area-independent. For the second step of deciding which papers are presented at a particular conference, the best approach may be to give the program chairs guidance but also freedom in their criteria. For example, program chairs can choose to use areas if they wish, but could also choose some other mechanism of ensuring quality and diversity of the scientific program.

- Day-by-day diversity of the papers submitted to the rolling review process: The biggest risk here would be that people would choose not to submit papers to the reviewing pool because they think they would not be appreciated. This is probably linked to the perceived quality of the reviews they get, which is linked to the issues below.

- Day-by-day capacity to have competent *action editors* handle a diverse set of papers: The pool of action editors will be relatively big, and in the current design the action editors will be automatically assigned. As noted above, if the automatically assigned action editor cannot sufficiently handle the paper they can be tasked with finding a new editor, ideally within, but perhaps even outside of the standing action editor pool.

- Day-by-day capacity to have competent *reviewers* handle a diverse set of papers: Once the action editor is chosen, they will presumably be an expert in the field. They can then use the automated system to find a list of suggested reviewers, and choose them based on their expertise (as well as reviewing load, see below). If there are no reviewers currently within the reviewing pool, they can invite external reviewers to the reviewing pool (possibly suggested by a similar mechanism to the one mentioned above for action editors).

- Ability of program chairs to encourage participation of action editors, reviewers, or authors in certain thematic areas: Note that the program chairs will always have some discretion with respect to how they build the program of the conference, as they do now. Let's say a conference wants to have a theme session. The simplest way to achieve this is an announcement to the effect of "there will be a theme on XXX at ACL 2022, please submit papers to the reviewing pool on these topics!" If the reviewing pool already has the capacity to review these papers, then nothing needs to be changed. However, if the reviewing pool is lacking in editors or reviewers in these areas, then the above mechanisms could be used to add editors or reviewers accordingly.

How to Transition to the System?

Related venues will need to agree to use the system and transition to it seamlessly. How do we ensure venues agree to use the system? For major conferences (e.g. ACL, EMNLP, NAACL, EACL, AACL) and potentially also major journals (TACL), there are two potential options: complete adoption and partial adoption. In complete adoption, all submissions would be handled through the two-step rolling review system. In partial adoption, the venues could run their own review process, but additionally accept submissions from the centralized reviewing pool. Complete adoption would likely be more efficient/fair, but would also require a decision from the ACL executive board to switch over to this new system and mandate this of the program chairs. One option would be to do a partial adoption for a few years, then move to a complete adoption.

For more focused workshops or other non-major conferences in the ACL anthology, the character of venues is sometimes very different, and it is necessary to ensure that people still have flexibility and don’t feel constrained. Focused workshops may prefer to partially adopt the system and still have their own reviewing pool, but other workshops may prefer not to handle reviewing themselves, as it is a major burden.

Measuring “Acceptance Rate”

There are some organizations that measure the quality of conferences based on the acceptance rate of papers submitted there. This can be important for career advancement, so it is important to have an acceptance rate that appropriately represents the difficulty of publishing a paper in the conference. A few ideas for measuring acceptance rate for a particular venue are below:

- Accepted Papers / Presentation Requests for Conference or Journal: This is simple, but may face problems if authors self-select, not requesting a presentation if their reviews are not good. Thus the acceptance rate could seem artificially high, which would make the venues seem less selective than they actually are.

- Accepted Papers / Papers in the Review System: Another option would be to divide the number of accepted papers by the number of papers that are in the review system and eligible to make a presentation request at any point. This would give a low acceptance rate, because all papers submitted to ACL-related venues would be in the system, making the denominator quite large.

- Accepted Papers / Some Fraction of Papers in the Review System: We could take a middle-ground strategy between the two. For example, upon submission have authors select a preferred venue (ACL/EMNLP/NAACL), or preferred type of venue (Conference/Journal/Workshop). We could then use the fraction of the papers that selected a particular venue as preferred as an indication of that venue’s popularity, and calculate the denominator based on these statistics. Alternatively, we could use all papers in the system that selected a particular type of venue, e.g. “Conference”, in the denominator when calculating conference acceptance rates (and similarly for journals).

- Compare Accepted Paper Scores to Full Submission Pool Scores: As another option, we could keep track of the overall distribution of numerical review scores in the system and the distribution for each conference. Then, we calculate an estimate of the review score necessary to be accepted at the conference, and find its percentile in the overall review pool. To take a made-up example, let's say that the median overall review score of papers accepted to ACL was 4.1, and only 16% of papers in the overall review pool had scores of 4.1 or higher. In this case, the acceptance rate for ACL would be reported as 16%. Instead of the median for each publication venue, we could also take the 10th or 20th percentile, representing the lower end of the venue’s accepted range.
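The score-based option above can be made concrete with a short sketch. It assumes each paper has a single overall numerical score and uses a nearest-rank quantile to pick the threshold; both are illustrative choices rather than part of the proposal, and the function names and all scores below are made up.

```python
import math


def nearest_rank_quantile(scores: list[float], q: float) -> float:
    """Return the q-quantile of scores using the nearest-rank method (0 < q <= 1)."""
    ordered = sorted(scores)
    # round() guards against floating-point noise such as 0.1 * 30 == 3.0000000000000004
    rank = max(1, math.ceil(round(q * len(ordered), 9)))
    return ordered[rank - 1]


def score_based_acceptance_rate(
    accepted_scores: list[float], pool_scores: list[float], q: float = 0.5
) -> float:
    """Fraction of the whole review pool scoring at or above the venue's threshold.

    The threshold is the q-quantile of the scores of papers the venue accepted:
    q=0.5 is the median; q=0.1 or q=0.2 targets the lower end of the accepted range.
    """
    threshold = nearest_rank_quantile(accepted_scores, q)
    at_or_above = sum(1 for s in pool_scores if s >= threshold)
    return at_or_above / len(pool_scores)


# Made-up data: 25 papers in the pool, 3 of them accepted at the venue.
pool_scores = [2.0] * 10 + [3.0] * 8 + [3.8] * 3 + [4.1, 4.3, 4.5, 4.8]
accepted_scores = [3.8, 4.1, 4.5]

median_rate = score_based_acceptance_rate(accepted_scores, pool_scores)        # threshold 4.1
lower_rate = score_based_acceptance_rate(accepted_scores, pool_scores, q=0.2)  # threshold 3.8
print(f"{median_rate:.0%} {lower_rate:.0%}")  # 16% 28%
```

With these made-up numbers the median threshold of 4.1 reproduces the 16% figure from the example, while the 20th-percentile threshold gives a more lenient 28%. The ratio-based options in the list need only a division, so the main design question for them is which denominator to report.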

Other Fundamental Comments/Concerns

- How to avoid reviewer burnout?: Some people don’t want to be reviewing constantly. How do we prevent this in a rolling review system?

- Answer: As noted above, we can have a centralized system that keeps track of “review balance” (how much reviewing people have done) and tries to distribute it equitably. In addition, some people prefer to review many papers at a time as opposed to a few every month. Thus, at the beginning of the monthly reviewing cycle, we could give people a chance to bid on papers that they would like to handle. Those who want to do a lot of reviewing/chairing in one month can bid to take several papers, increasing their review balance so they don’t have to review in succeeding months. In addition, we may add the ability for reviewers to indicate that they are on vacation or otherwise unavailable.

- Would the “revise and resubmit” option favor boring work or lengthen the review process?: There are concerns that a “revise and resubmit” option favors more conservative work, particularly if the reviewers are the same through multiple rounds of review. In addition, review can take longer.

- Answer: Regarding lengthening the review process, the authors are given an option to submit their work for presentation as soon as they like, so they can conclude the review process at a point where they choose once they have received initial reviews and the paper has been judged compliant. In addition, the authors can appeal for a different set of reviewers if they feel that their reviews were unfair or of poor quality. A central review process would also allow us to collect statistics and adjust as we learn more about the trends.

- Papers being repeatedly rejected by conferences: In a "pick-from-the-pool" system, would program chairs have more leeway to pass over a paper? After all, they are not rejecting the paper; they are just leaving it available to other conferences/journals.

- Answer: This is also the case in the current system (often papers are rejected because they're "not ready yet"), but there is some chance that the system proposed here may encourage this further. One potential fix would be to encourage PCs to not pass over highly-rated papers too many times unless there is a really good reason. Because most PCs believe in the values of fairness, this could potentially favor highly-rated papers that have been passed over in the past.

For papers that are not so highly rated, the authors have several choices (analogous to the choices they have in the current system): (a) revise the paper in an attempt to improve the review ratings, (b) lower their standards for where they present their papers, such as submitting to a workshop instead of a conference, (c) if we implement a centralized public repository of under-review or deblinded papers such as the “Findings of the EMNLP” or “ACL Archive”, allow the paper to remain there without being presented at any conference.

Other Logistics Comments/Concerns

- Retraction: There is not currently a mechanism for retracting papers from the ACL anthology, and hence there is no mention of this in the proposal.

- Answer: Having a mechanism for retracting papers is probably important, but somewhat orthogonal to this proposal, and could potentially be decided by the ACL Exec separately.

- Publishing Fees: TACL is an edited journal, and it costs money for the ACL to publish papers there, which is a concern if we move to something similar in the new system.

- Answer: It is likely that the majority of authors will still want to present their papers in conferences, as in our current system, so this may not be an issue. However, if transitioning to a monthly review system causes a large increase in the number of submissions to edited journals, we may have to reconsider. One option would be to make these journals more selective, and have them represent the cream of the crop of *ACL work, which may not be a bad thing.

Acknowledgements

This document was drafted by Graham Neubig and revised by the ACL Reviewing Committee: Amanda Stent, Ani Nenkova, Anna Korhonen, Barbara Di Eugenio, Hinrich Schuetze, Iryna Gurevych, Joel Tetreault, Marti Hearst, Matt Gardner, Nitin Madnani, Tim Baldwin, Trevor Cohn, Yang Liu.

Thank you to the ACL Executive Committee for additional feedback.