2021Q3 Reports: Reviewer Mentoring Program Chairs

ACL2021 Reviewer Mentoring Committee Co-Chairs Report

Jing Huang, Antoine Bosselut, Christophe Gravier, July 15, 2021

Introduction

ACL2020 produced a first effort to introduce a mentoring program for young researchers. As stated in the ACL2020 co-chairs report [1], its origin and motivations are as follows: “Given the rapid growth of NLP in terms of number of papers and new students, it is very important for our community to mentor and train our new reviewers. ACL2020 has launched a pilot program which calls for each AC to mentor at least one novice reviewer. Ultimately, the goal is to provide long-needed mentoring to new reviewers. At the very least, this process will inform ACL on constructing a reviewer mentoring program that is more scalable in the future.” This initiative paved the way for a renewed effort at ACL2021 with the creation of a new Reviewer Mentoring Committee Co-Chairs role.

We built upon this previous experience and did our best to push the reviewer mentoring service further. We focused especially on the issues raised previously, summarized in the following recommendations (quoted in extenso from the same report, for reference):

- R1: Establish a better mentor-mentee matching system. For example, instead of selecting mentees based on their review experience, we could have junior reviewers enroll themselves in the mentoring program. This would lead to more motivated mentees which will ensure more productive follow up.

- R2: Set up guidelines to help mentors engage in mentoring activities, e.g., what needs to be done, what is expected from the mentees.

- R3: Establish a dedicated role of “mentoring chair” to organize and coordinate mentoring efforts. This has therefore been implemented as we were enrolled as co-chairs for this community effort.

- R4: Improve infrastructure and communication channels. Most of the communication related to the mentoring program was done using google doc, spreadsheet, and email, which made it cumbersome to manage and keep track of progress. An improved infrastructure (e.g., as part of the Softconf system) will help.

- R5: Build a database of papers and examples and create tutorials with examples that can be helpful for new reviewers. This can also provide tools for mentors when they engage with mentees in one-to-one interaction.

Preliminary survey to ACs and SACs

We describe here the preliminary work that we did in order to decide how best to improve the existing system. Last year's report was a great starting point (see introduction). In agreement with our program committee co-chairs, we then decided to conduct a Reviewer Mentoring Program Survey asking ACL2021 ACs and SACs about their expectations of the reviewing process. This served two objectives. First, we wanted to collect feedback on how ACs and SACs perceive the reviewing process in general, to help first-time reviewers better understand what is expected of them when they review. Second, we wanted to listen to the community and collect its ideas in order to serve it best.

The results were made publicly available on the ACL2021 website [2]. With the help of our program committee chairs, we reached all SACs and ACs, and 130 Senior Area Chairs and Area Chairs (24 SAC and 104 ACs) answered this survey. The analysis of this feedback was sent as a link in a dedicated email to first-time reviewers (we describe the enrollment and communication process later in this report). We believe that the survey results go beyond the reviewer mentoring process and are an insightful resource for the community.

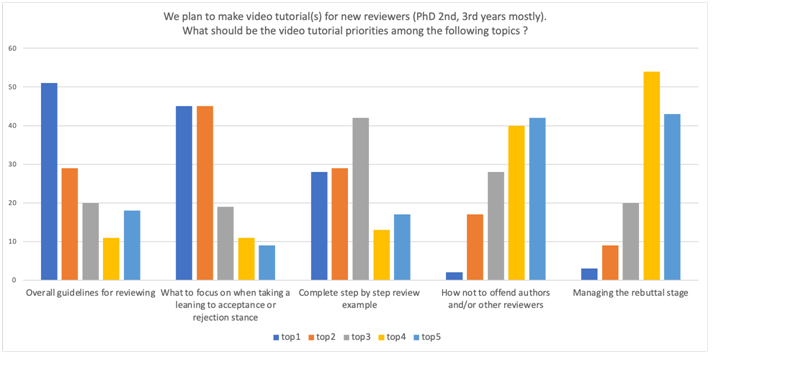

Among many questions, one was dedicated to guiding our effort on creating online tutorial content. Producing online video content was our answer to recommendation R5 presented above (Build a database of papers and examples and create tutorials with examples that can be helpful for new reviewers), an approach that also gained traction during the pandemic, with junior researchers mostly isolated at home. We asked the following: “We plan to make video tutorial(s) for new reviewers (PhD 2nd, 3rd years mostly). What should be the video tutorial priorities among the following topics?”. We received the following feedback:

Most of the feedback asked for video content on reviewing in general, on scoring, and on illustrative examples. We used that input to drive the effort described in what follows. The entire survey result is publicly available [3].

Reviewer mentoring service organization

Following recommendation R1 (Establish a better mentor-mentee matching system), we transitioned from a fully mandatory enrollment to a two-part enrollment in the reviewer mentoring service for first-time reviewers: one mandatory part and one opt-in part. The idea was to get in touch with first-time reviewers early and ask them to access the online textual and video content that we put together for them. This step was mandatory for first-time reviewers (although we had no way of checking whether a given first-time reviewer actually watched the videos, besides overall viewing statistics). Then, still before the conference deadline, they could opt in to the one-on-one reviewer mentoring service, in which each first-time reviewer had a single paper to review under mentorship. We then matched mentees and mentors, pairing mentees with ACs in the same track as much as possible. They were matched with the help of SACs (we provide details on that process later in this report). Finally, first-time reviewers were asked to respect a specific review deadline (ten days before the deadline for other reviewers), so that the mentee/mentor discussions could take place.

The most tedious part is probably the matching of mentors with mentees. We did receive some requests from ACs to reduce their mentoring load or to be released from it (very few overall; nearly all ACs accepted and served as mentors, and we are really thankful to them, as the reviewer mentoring service could not happen otherwise). The main issues and efforts were:

- The need to provide a communication channel between mentors and mentees. We would have liked to use Softconf so that mentors and mentees could discuss their paper online and anonymously, without other reviewers having access to their discussion. Even with the help of our Program Committee co-chairs, we were not able to find a working solution. We instead used manual matching, maximising same-track mentee/mentor pairs, and then put mentees and mentors in contact by email. We created a template and sent a batch of emails, one to each mentee/mentor pair, to introduce them to each other.

- The significant effort to create video content befitting and dedicated to ACL, which we will describe in the following section.

The pool of first-time reviewers was identified from the reviewer enrollment form, which asks applicants about their reviewing experience. We started with 184 reviewers who were reviewing for a *CL event for the first time, distributed as follows:

- 17 reviewers in tracks who declared "First time reviewing for anything"

- 167 reviewers in tracks who declared "First time reviewing for a *CL event but have reviewed before"

For the entire mentoring process, we set up and applied the following agenda, which was sent to all 184 first-time reviewers we identified. We reproduce it here to give precise information on the timings we implemented, in the hope that it helps future reviewer mentoring co-chairs.

Stage 1 (Feb 1-12, 2021) - Mandatory: watching videos and optionally signing up for the one-on-one mentoring program

(a) The full-paper submission deadline is Feb 1, and the review period starts around Feb 25, 2021.

(b) The mentoring committee will release reviewer tutorial videos and other optional reviewing materials around Feb 1.

(c) All the first-time reviewers are required to watch the videos during Stage 1.

(d) If, after watching the videos and going over the optional materials, reviewers want to be paired with a mentor to further improve their reviewing skills, they can sign up for Stage 2 of the program (aka the one-on-one mentoring). The deadline for signup is Feb 12. Note that participation in Stage 2 is on a voluntary basis.

(e) The mentoring committee will then, with help from the SACs, pair up the reviewers with mentors before the review period starts on Feb 25.

Stage 2 (Feb 25 - March 20) - Opt-in: one-on-one mentoring during the review period

This step is on a voluntary basis. Reviewers who participated in this stage needed to complete one of their reviews by March 10, ten days before the normal review due date. The SACs selected beforehand which review would be written by the reviewer and reviewed by the mentor. To ease the reviewing burden, the selected paper was one that the mentee was already reviewing as part of their duties to the conference and that the AC was meta-reviewing. Mentors read reviews and provided feedback. Reviewers then revised their reviews based on the feedback. Mentors read the revision and made further suggestions as needed.
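For future co-chairs who wish to script the pairing, the constraint described above can be sketched as follows. This is only an illustrative sketch and not the script used for ACL 2021: the `Person` class, the `match_mentees` function, the greedy strategy and the sample data are all hypothetical. It assumes each mentee comes with the set of papers they review and each mentor (AC) with the set of papers they meta-review. The shared paper is treated as a hard constraint, while a same-track mentor and a balanced mentor load are treated as preferences.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    name: str
    track: str
    papers: frozenset  # paper IDs reviewed (mentee) or meta-reviewed (mentor)


def match_mentees(mentees, mentors):
    """Greedily pair each mentee with a mentor who shares one of their papers."""
    load = {m.name: 0 for m in mentors}  # mentees assigned to each mentor so far
    pairs, unmatched = [], []
    for mentee in mentees:
        # Hard constraint: the mentor must meta-review a paper the mentee reviews.
        candidates = [m for m in mentors if mentee.papers & m.papers]
        if not candidates:
            unmatched.append(mentee.name)
            continue
        # Preferences: same track first, then the least-loaded mentor.
        best = min(
            candidates,
            key=lambda m: (m.track != mentee.track, load[m.name], m.name),
        )
        load[best.name] += 1
        paper = min(mentee.papers & best.papers)  # deterministic choice of paper
        pairs.append((mentee.name, best.name, paper))
    return pairs, unmatched


# Hypothetical sample data, for illustration only.
mentors = [
    Person("ac_a", "Generation", frozenset({101, 102})),
    Person("ac_b", "Semantics", frozenset({201})),
]
mentees = [
    Person("rev_1", "Generation", frozenset({101, 201})),
    Person("rev_2", "Semantics", frozenset({102})),
    Person("rev_3", "Semantics", frozenset({999})),
]
pairs, unmatched = match_mentees(mentees, mentors)
print(pairs)      # (mentee, mentor, paper) triples
print(unmatched)  # mentees with no eligible mentor
```

Mentees who share no paper with any mentor are returned separately so that they can be handled by hand, for example by asking the relevant SAC to adjust an assignment.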

For step 1d, we created an online form [4] and sent it to all enrolled first-time reviewers. Although only 184 reviewers had declared themselves first-time reviewers for *CL conferences, 198 asked to be enrolled in the mentee/mentor part of the reviewer service. Some reviewers who were not first-time reviewers also enrolled given the opportunity, which underscores the impact and importance of this mentoring initiative.

ACL 2021 new tutorial contents

We focused a significant part of our effort on creating new textual and video content for first-time reviewers.

For the new text content, we maintained (with the help of the Website co-chairs) a new dedicated page for the reviewer mentoring program [5].

For the new video content, we and some invited colleagues self-produced the following videos:

- A first overall video on how to review, based on an illustrative example (recommendation R5). This video was produced by Dr. Ievgen Redko, Associate Professor at Université Jean Monnet, who has received several best reviewer awards at major AI conferences. This short video is about 15 minutes long. It has been viewed more than 1,500 times at the time of writing. It covers how to process a paper, what to look for and how to write down your review, based on an illustrative example. The video is accompanied by a blog post (written before we began serving for ACL 2021, and which actually led us to ask I. Redko to record his thoughts). The blog post was also available online and provided to first-time reviewers.

- A set of mini-videos with a very narrow focus (max 5 minutes):

  - The reviewer mentoring program, by Jing Huang, ACL2021 reviewer mentoring co-chair;

  - The review form overview, by Antoine Bosselut, ACL2021 reviewer mentoring co-chair;

  - The ethics questions on the review form, by Xanda Schofield, co-chair of the Ethics Advisory Committee (EAC) of ACL 2021;

  - The reproducibility questions on the review form, by Christophe Gravier, ACL2021 reviewer mentoring co-chair.

Since YouTube was not an option (it is not available in all countries), we asked the Social Media co-chairs for help in creating an ACL Vimeo channel to host these videos. The videos were promoted on the conference website, via emails and on social networks. All videos are available at [6]. The video from Dr. Redko was, however, first posted on YouTube [7] (before we switched to Vimeo for the aforementioned reason).

This year's reviewer mentoring service in a nutshell

- ACL created a dedicated co-chair role for the reviewer mentoring program;

- The reviewer mentoring service became a two-step process: a first, mandatory step for first-time reviewers, and a second, opt-in step of one-to-one mentoring;

- We surveyed ACs and SACs about what they see as important when reviewing and which video materials first-time reviewers need;

- We created a dedicated public channel for communication with the community on the ACL 2021 website, with public updates on the reviewer mentoring process during the months before and after the submission deadline;

- We created several online tutorial videos on how to review and address the rebuttal. We expect these videos to be useful for future editions as well. This goes along with the creation of the ACL Anthology Vimeo channel for that purpose;

- We worked closely with the Program co-chairs, but also with the Website and communication co-chairs, to disseminate information about the reviewer mentoring service on the website, social media, the conference forum, and the newly created ACL Vimeo account for the video tutorials that we created. Interactions with the EAC were also very fruitful for the video on the ethics questions on the review form.

What can be done in the future?

We implemented most of the recommendations expressed in last year's reviewer mentoring report. However, we were unable to find a satisfactory solution to recommendation R4 (Improve infrastructure and communication channels). We were not able to adapt Softconf to our needs as suggested, and fell back on emails and online shared documents (as explained previously). Communication with first-time reviewers was improved up to the point of initiating mentee/mentor matchings. Everything downstream of this milestone remains difficult to organize.

In addition, a few things can be improved for the next reviewer mentoring program:

- Post-mentoring survey. We did our best to fulfill our duty and we think that we contributed to moving this community effort forward. We nonetheless regret that we were not able to organize a post-reviewing survey of mentors and mentees in this special year. This was done last year, and should be done each year. Creating a survey and making it available to the next co-chairs could ease the process (it would decrease the workload and make results more comparable if the questions stay the same from year to year).

- In a non-Covid situation, we would have been happy to organize a semi-formal event at the conference itself with first-time reviewers.

- Improve SAC and AC participation earlier in the process:

  - ACs were not aware that being an AC automatically enrolled them as mentors in the ACL 2021 reviewer mentoring program. Consequently, a few mentors dropped out once mentoring assignments were made. Certain mentees could not be easily re-assigned to other mentors and were not able to participate in the program. Making it explicit to ACs that enrollment in the mentorship program is a precondition of being an AC would prevent future misunderstandings.

  - SACs made adjustments to reviewer and meta-reviewer (AC) paper assignments after mentor matches had been made. To ease the burden of mentorship, we had set the constraint that the paper being reviewed as part of the mentor program should be a paper the mentee was already reviewing as part of their conference duties and one that the AC was meta-reviewing. Consequently, when adjustments were made to reviewer or meta-reviewer assignments, the matchings often became outdated. Certain mentees could not easily be re-assigned and could not participate in the program. Coordinating with SACs to freeze the paper assignments linked to the mentoring program would reduce the risk of such assignments falling through.

- Some first-time reviewers may feel the need for an online discussion in order to be better prepared for reviewing. Watching videos is a definite help, but an open online meeting where first-time reviewers can ask questions, held before the opt-in form is sent, could make them feel more confident and better supported.

Final words

We are truly thankful for having had the opportunity to contribute to the reviewer mentoring effort and help it grow. We are really grateful for the trust, time and effort offered by our Program Committee co-chairs. We especially thank Prof. Fei Xia for her availability and support during the many online discussions, and Roberto Navigli for the Python magic that allowed us to match mentees to mentors. We would be happy to help next year's co-chairs at the beginning of their service in order to pass along our experience if needed.

List of additional resources

Rationale for the reviewer mentoring program [8]