2018Q3 Reports: Program Chairs

Program Committee

Organising Committee

General Chair

- Claire Cardie, Cornell University

Program Chairs

- Iryna Gurevych, TU Darmstadt

- Yusuke Miyao, National Institute of Informatics

Workshop Chairs

- Brendan O’Connor, University of Massachusetts Amherst

- Eva Maria Vecchi, University of Cambridge

Tutorial Chairs

- Yoav Artzi, Cornell University

- Jacob Eisenstein, Georgia Institute of Technology

Demo Chairs

- Fei Liu, University of Central Florida

- Thamar Solorio, University of Houston

Publications Chairs

- Shay Cohen, University of Edinburgh

- Kevin Gimpel, Toyota Technological Institute at Chicago

- Wei Lu, Singapore University of Technology and Design (Advisory)

Exhibits Coordinator

- Karin Verspoor, University of Melbourne

Conference Handbook Chairs

- Jey Han Lau, IBM Research

- Trevor Cohn, University of Melbourne

Publicity Chair

- Sarvnaz Karimi, CSIRO

Local Sponsorship Chair

- Cecile Paris, CSIRO

Local Chairs

- Tim Baldwin, University of Melbourne

- Karin Verspoor, University of Melbourne

- Trevor Cohn, University of Melbourne

Student Research Workshop Organisers

- Vered Shwartz, Bar-Ilan University

- Jeniya Tabassum, Ohio State University

- Rob Voigt, Stanford University

Faculty Advisors to the Student Research Workshop

- Marie-Catherine de Marneffe, Ohio State University

- Wanxiang Che, Harbin Institute of Technology

- Malvina Nissim, University of Groningen

Webmaster

- Andrew MacKinlay (acl2018web@gmail.com), Culture Amp / University of Melbourne

Area chairs

- Dialogue and Interactive Systems:
  - Asli Celikyilmaz (Senior Chair)
  - Verena Rieser
  - Milica Gasic
  - Jason Williams

- Discourse and Pragmatics:
  - Manfred Stede
  - Ani Nenkova (Senior Chair)

- Document Analysis:
  - Hang Li (Senior Chair)
  - Yiqun Liu
  - Eugene Agichtein

- Generation:
  - Ioannis Konstas
  - Claire Gardent (Senior Chair)

- Information Extraction and Text Mining:
  - Feiyu Xu
  - Kevin Cohen
  - Zhiyuan Liu
  - Ralph Grishman (Senior Chair)
  - Yi Yang
  - Nazli Goharian

- Linguistic Theories, Cognitive Modeling and Psycholinguistics:
  - Shuly Wintner (Senior Chair)
  - Tim O'Donnell (Senior Chair)

- Machine Learning:
  - Andre Martins
  - Ariadna Quattoni
  - Jun Suzuki (Senior Chair)

- Machine Translation:
  - Yang Liu
  - Matt Post (Senior Chair)
  - Lucia Specia
  - Dekai Wu

- Multidisciplinary (also for AC COI):
  - Yoav Goldberg (Senior Chair)
  - Anders Søgaard (Senior Chair)
  - Mirella Lapata (Senior Chair)

- Multilinguality:
  - Bernardo Magnini (Senior Chair)
  - Tristan Miller

- Phonology, Morphology and Word Segmentation:
  - Graham Neubig
  - Hai Zhao (Senior Chair)

- Question Answering:
  - Lluís Màrquez (Senior Chair)
  - Teruko Mitamura
  - Zornitsa Kozareva
  - Richard Socher

- Resources and Evaluation:
  - Gerard de Melo
  - Sara Tonelli
  - Karën Fort (Senior Chair)

- Sentence-level Semantics:
  - Luke Zettlemoyer (Senior Chair)
  - Ellie Pavlick
  - Jacob Uszkoreit

- Sentiment Analysis and Argument Mining:
  - Smaranda Muresan
  - Benno Stein
  - Yulan He (Senior Chair)

- Social Media:
  - David Jurgens
  - Jing Jiang (Senior Chair)

- Summarization:
  - Kathleen McKeown (Senior Chair)
  - Xiaodan Zhu

- Tagging, Chunking, Syntax and Parsing:
  - Liang Huang (Senior Chair)
  - Weiwei Sun
  - Željko Agić
  - Yue Zhang

- Textual Inference and Other Areas of Semantics:
  - Michael Roth (Senior Chair)
  - Fabio Massimo Zanzotto (Senior Chair)

- Vision, Robotics, Multimodal, Grounding and Speech:
  - Yoav Artzi (Senior Chair)
  - Shinji Watanabe
  - Timothy Hospedales

- Word-level Semantics:
  - Ekaterina Shutova
  - Roberto Navigli (Senior Chair)

Main Innovations

The PC co-chairs focused mainly on review quality and reviewer workload, which are becoming serious issues because the number of submissions is increasing rapidly while only a limited number of experienced reviewers is available.

- New structured review form (in cooperation with NAACL 2018) that asks reviewers to address the key contributions of the reviewed paper, strong arguments for or against it, and other aspects (see also below under “Review Process”). A sample review form was made available to the community in advance: https://acl2018.org/2018/02/20/sample-review-form/

- Overall rating scale changed from 1-5 to 1-6, where 6 stands for “award-level” paper (see details below under “Review Process”).

- The role of PC chair assistant was filled by several senior postdocs, who managed PC communication in a timely manner, drafted documents, and helped the PC co-chairs during the most intensive work phases.

- Each area has a Senior Area Chair responsible for decision making in the area, including assigning papers to other Area Chairs, determining final recommendations, as well as writing meta-reviews, if necessary.

- Each Area Chair was assigned around 30 papers as a meta-reviewer and was responsible for this pool at every step of reviewing, e.g. checking desk-reject cases, chasing late reviewers, improving review comments, and leading discussions. This made the responsibilities of area chairs clear, and the overall review process went smoothly.

- The “Multidisciplinary” area from previous years was renamed to “Multidisciplinary / also for AC COI” so that Area Chairs’ own papers would be reviewed in this area, preventing any conflict of interest (COI).

- Weak PC COIs (e.g., groups associated with a program chair through graduate schools or project partners) were handled by the other program chair. Program chairs’ research groups were not allowed to submit papers to ACL in order to prevent any COI.

- A bottom-up community-based approach for soliciting area chairs, reviewers, and invited speakers (https://acl2018.org/2017/09/06/call-for-nominations/)

- The Toronto Paper Matching System (TPMS) has been used since ACL 2017; this year we also used TPMS to assign papers to area chairs (as meta-reviewers) and encouraged the community to create TPMS profiles.

- Automatic checking of the paper format has been implemented in START. Authors were notified when a potential format violation was found during the submission process. This significantly reduced the number of desk rejects due to incidental format violations.

Submissions

An overview of statistics

- In total, 1621 submissions had been received when the submission deadline passed: 1045 long and 576 short papers.

- 13 erroneous submissions were deleted or withdrawn in the preliminary checks by PCs.

- 25 papers were rejected without review (16 long, 9 short); the reasons were violations of the ACL 2018 style guidelines and dual submission.

- 32 papers were withdrawn before the review period started; the main reason was that the papers had been accepted as short papers at NAACL.

- In total, 1551 papers went into the reviewing phase: 1021 long, 530 short papers.

- 3 long and 4 short papers were withdrawn during the reviewing period. 1018 long and 526 short papers were considered during the acceptance decision phase.

- The authors of 258 long and 126 short papers were notified of acceptance. 2 long papers and 1 short paper were withdrawn after the notification. Finally, 256 long and 125 short papers appeared in the program. The overall acceptance rate was 24.7%.

- 1610 reviewers (1473 primary, 137 secondary reviewers) were involved in the reviewing process; each reviewer reviewed about 3 papers on average.
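The counts in this overview can be cross-checked with a few lines of arithmetic. The Python sketch below is illustrative only: the variable names are ours, and the definition of the acceptance rate (papers that appeared in the program divided by papers considered in the acceptance decision phase) is an assumption, chosen because it reproduces the reported 24.7%.

```python
# Cross-check of the submission counts reported above (ACL 2018).
# All variable names are illustrative; only the numbers come from the report.
received = {"long": 1045, "short": 576}
erroneous = 13         # deleted or withdrawn in the preliminary checks
desk_rejected = 25     # rejected without review (16 long, 9 short)
withdrawn_early = 32   # withdrawn before the review period

entered_review = {"long": 1021, "short": 530}
withdrawn_in_review = {"long": 3, "short": 4}
notified = {"long": 258, "short": 126}
withdrawn_late = {"long": 2, "short": 1}

total_received = sum(received.values())
total_entered = total_received - erroneous - desk_rejected - withdrawn_early

# Papers still in play at decision time, and papers that reached the program.
considered = {k: entered_review[k] - withdrawn_in_review[k] for k in entered_review}
in_program = {k: notified[k] - withdrawn_late[k] for k in notified}

# Assumption: rate = papers in the program / papers considered for acceptance.
acceptance_rate = sum(in_program.values()) / sum(considered.values())

print(f"received: {total_received}, entered review: {total_entered}")
print(f"considered: {considered}, in program: {in_program}")
print(f"acceptance rate: {acceptance_rate:.1%}")
```

Under this assumption the totals at each stage agree with the figures listed above, including the per-type counts for long and short papers.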

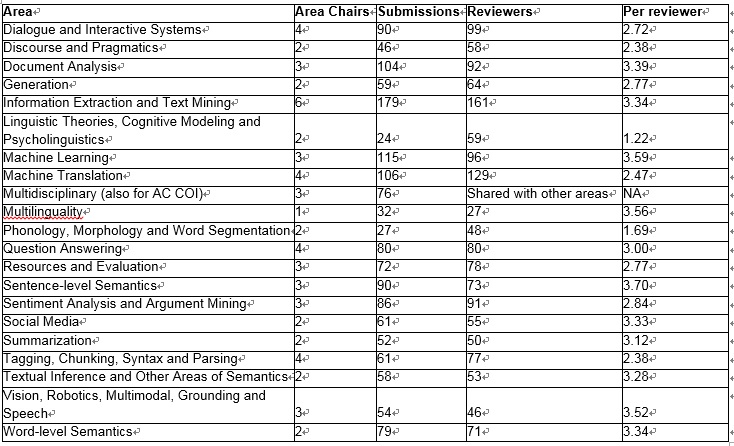

Detailed statistics by area

Review Process

Reviewing is an essential building block of a high-quality conference. Recently, the quality of reviews for ACL conferences has been increasingly questioned. However, ensuring and improving review quality is perceived as a great challenge. One reason is that the number of submissions is rapidly increasing, while the number of qualified reviewers is growing more slowly. Another reason is that members of our community are increasingly suffering from high workload, and are becoming frustrated with an ever-increasing reviewing load.

To address these concerns, the Program Co-Chairs of ACL 2018 took a systematic approach and changed the review process in several ways, aiming to obtain as many high-quality reviews as possible at a lower cost.

Recruiting area chairs (ACs) and reviewers:

- Recruit area chairs (Sep - Oct 2017): the programme co-chairs (PCs) first decided on a list of areas and estimated the number of submissions for each area, and then proposed a short list of potential AC candidates for each area. Candidates who accepted the invitation formed the AC committee.

- Look for potential reviewers (Sep - Oct 2017): PCs sent out reviewer nomination requests in Sep 2017 to look for potential reviewers; 936 nominations were received by Nov 2017. In addition, PCs used the reviewer lists of major NLP conferences from the previous one or two years, as well as nominations from ACs. The final list of candidates consisted of over 2000 reviewers.

- Recruit reviewers (Oct - Dec 2017): the ACs used the candidate reviewer list to form a shortlist for each area and invited the reviewers they selected. 1510 candidates were invited in this first round, and ACs continued inviting reviewers as needed.

- After the submission deadline: several areas received significantly more submissions than estimated. PCs invited additional ACs for these areas, and ACs invited additional reviewers as necessary. In the end, the Program Committee consisted of 60 ACs and 1443 reviewers.

Assigning papers to areas and reviewers:

- First round: Initial assignments of papers to areas were determined automatically from the authors’ input. PCs then went through all submissions and moved papers to other areas where needed, considering COI and topical fit. PCs assigned one AC as a meta-reviewer to each paper using TPMS scores.

- Second round: ACs looked into the papers in their area and adjusted the meta-reviewer assignments. ACs sent a report to PCs if they found problems.

- Third round: PCs made the final decision, considering workload balance, COI and topical fit.

- Fourth round: ACs decided which reviewers would review each paper, based on the ACs’ knowledge of the reviewers, TPMS scores, reviewers’ bids, and COI.

Deciding on the reject-without-review papers:

- PCs went through all submissions in the first round, and then ACs looked into each paper in the second round and reported any problems.

- For each suspicious case, intensive discussions took place between PCs and the corresponding ACs, to make final decisions.

A large pool of reviewers

A commensurate number of reviewers is necessary to review our increasing number of submissions. As reported previously (see Statistics on submissions and reviewing), the Program Chairs asked the community to suggest potential reviewers. We formed a large pool of reviewers which included over 1,400 reviewers for 21 areas.

The role of the area chairs

The Program Chairs instructed area chairs to take responsibility for ensuring high-quality reviews. Each paper was assigned one area chair as a "meta-reviewer". This meta-reviewer kept track of the reviewing process and took actions when necessary, such as chasing up late reviewers, asking reviewers to elaborate on review comments, leading discussions, etc. Every area chair was responsible for around 30 papers throughout the reviewing process. The successful reviewing process of ACL 2018 owes much to the significant amount of effort by the area chairs.

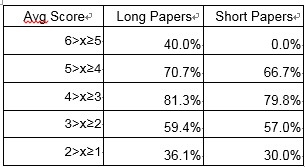

When the author response period started, 97% of all submissions had received at least three reviews, so authors had sufficient time to respond to all reviewers' concerns. This was possible thanks to the great effort of the area chairs in chasing up late reviewers. A majority of reviews described the strengths and weaknesses of the submission in sufficient detail, which greatly helped discussions among reviewers and decision-making by area and program chairs. (See more details below.) The area chairs were also encouraged to initiate discussions among reviewers. In total, the area chairs and reviewers posted 3,696 messages for 1,026 papers (covering 66.5% of all submissions), which shows that intensive discussions did take place. The following table shows the percentage of papers that received at least one message for each range of average overall score. It is clear that borderline papers were discussed intensively.

Structured review form

Another important change in ACL 2018 is the structured review form, which was designed in collaboration with NAACL-HLT 2018. The main feature of this form is to ask reviewers to explicitly itemize strength and weakness arguments. This is intended…

- …for authors to provide a focused response: In the author response phase, authors were requested to respond to weakness arguments and questions. This made discussion points clear and facilitated discussions among reviewers and area chairs.

- …for reviewers and area chairs to understand strengths and weaknesses clearly: In the discussion phase, the reviewers and area chairs thoroughly discussed the strengths and weaknesses of each work. The structured reviews and author responses helped the reviewers and area chairs identify which weaknesses and strengths they agreed or disagreed upon. This was also useful for area chairs to evaluate the significance of the work for making final recommendations.

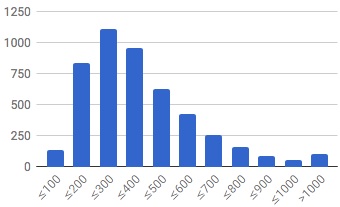

In the end, 4,769 reviews were received, 4,056 of which (85.0%) followed the structured review form. The following figure shows the distribution of word counts of all reviews. The majority of reviews had at least 200 words, which is a good sign. The average length was 380 words. We had expected more informative reviews (we estimated that around 500 words would be necessary to provide strength and weakness arguments in sufficient detail), but unfortunately many reviews contained only a single sentence for the strength or weakness arguments. These were sufficient in most cases for authors and area chairs to understand the point, but improvements in this regard are still needed.

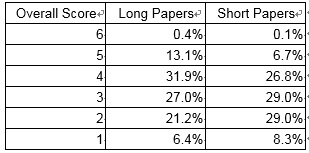
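As a quick sanity check on the review figures quoted in this report, the sketch below recomputes the share of structured reviews, the average number of reviews per reviewer, and the discussion coverage. It is illustrative only and the variable names are ours. Two choices are assumptions rather than statements from the report: the 1,544 papers considered in the decision phase are used as the denominator for discussion coverage (this reproduces the reported 66.5%), and both primary and secondary reviewers are counted when averaging reviews per reviewer.

```python
# Sanity check of the review statistics quoted in this report (ACL 2018).
total_reviews = 4769
structured_reviews = 4056
reviewers = 1473 + 137           # primary + secondary (assumed denominator)
messages = 3696
discussed_papers = 1026
papers_considered = 1018 + 526   # long + short at decision time (assumed denominator)

structured_share = structured_reviews / total_reviews
reviews_per_reviewer = total_reviews / reviewers
discussion_coverage = discussed_papers / papers_considered
messages_per_discussed_paper = messages / discussed_papers

print(f"structured reviews: {structured_share:.1%}")
print(f"reviews per reviewer: {reviews_per_reviewer:.2f}")
print(f"papers with discussion: {discussion_coverage:.1%}")
print(f"messages per discussed paper: {messages_per_discussed_paper:.1f}")
```

With these denominators, the computed shares match the percentages stated in the text, and the per-reviewer average is consistent with "about 3 papers" per reviewer.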

Another important change was the scale of the overall scores. NAACL 2018 and ACL 2018 departed from ACL’s traditional 5-point scale (1: clear reject, 5: clear accept) by adopting a 6-point scale (1: clear reject, ..., 4: worth accepting, 5: clear accept, 6: award-level). The ACL 2018 reviewing instructions explicitly stated that a score of 6 should be used only exceptionally, and this was indeed what happened. (See the table below.) This had the effect of changing the semantics of the scores: in contrast to the traditional scale, reviewers tended to give a score of 5 to more papers than in previous conferences. The following table shows the score distribution of all 4,769 reviews (not averaged scores for papers). Refer to the NAACL 2018 blog post for the statistics for NAACL 2018. The table shows that only 13.5% (long papers) and 6.8% (short papers) of reviews gave “clear accepts”; more importantly, the next set (those with an overall score of 3 or 4) was very large, with too many papers to include in the set of accepted papers.